Topic: cc-wiki-dump

Stack Exchange Data Explorer 2.0

It has been a year and a half since we launched Data Explorer. In the past few months Tim Stone (on a community grant) and I have pushed a major round of changes. Thanks Tim!

Recap of last year’s changes

Since we publicly launched Data Explorer, the most notable change contributed back by the community has been support for query plans. Big thanks to Justin for submitting the patch.

We also added quite a few bug fixes and features, mostly around merging users. Some features were added to defend data.se against an onslaught of public queries. A few features were added to support non-Stack Exchange data dumps, most notably a whitelisting system. Our very own Rebecca Chernoff ported Data Explorer to ASP.NET MVC3, among many other fixes.

The current round of changes offers some very cool new functionality, which is worth listing:

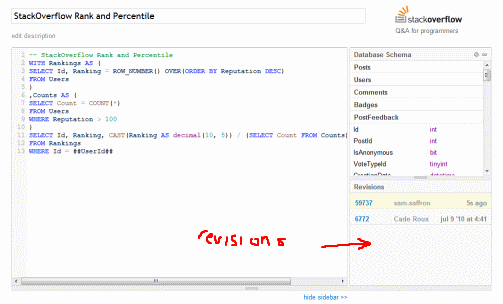

Query revisions

When we created Data Explorer there was no way to track a query’s “lineage”. This was particularly problematic because we had no way of updating featured queries or shared queries. Even I complained about this on meta.

The new pipeline works much like Gist: you can track the history of your query as you edit it, with the various editors attributed along the way.

You can link to a specific revision, or simply share a “query set” by using the permalink. By sharing a “query set” you can later on fix up any issues the query has, without needing to update the link. The new pipeline allows you to “fork” any query created by other users and tracks attribution along the way.
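To make the "query set" idea a little more concrete, here is a minimal Python sketch of the model described above. It is only an illustration under my own assumptions, not Data Explorer's actual schema: the class names `Revision` and `QuerySet` and their methods are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Revision:
    sql: str
    editor: str  # who saved this revision (kept for attribution)


@dataclass
class QuerySet:
    """Hypothetical container: one permalink, many revisions."""
    owner: str
    revisions: List[Revision] = field(default_factory=list)
    forked_from: Optional["QuerySet"] = None

    def save(self, sql: str, editor: str) -> int:
        """Append a new revision and return its index (a stable revision link)."""
        self.revisions.append(Revision(sql, editor))
        return len(self.revisions) - 1

    def latest(self) -> Revision:
        """What the query set permalink would resolve to."""
        return self.revisions[-1]

    def fork(self, new_owner: str) -> "QuerySet":
        """Copy the history into a new set that remembers where it came from."""
        child = QuerySet(owner=new_owner, forked_from=self)
        child.revisions = list(self.revisions)
        return child


shared = QuerySet(owner="alice")
first = shared.save("SELECT TOP 10 * FROM Posts", editor="alice")
shared.save("SELECT TOP 10 Id, Title FROM Posts", editor="bob")

print(shared.revisions[first].sql)  # a link to a specific revision never changes
print(shared.latest().sql)          # the permalink follows later fixes
```

The point of the split is that a link to the set keeps working after a fix, while a link to a revision stays frozen.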

Graphs

We added some basic graphing facilities, supporting 2 types of line graphs:

The first type is a simple graph, where the first column represents the X-axis and the other columns the data points. For example: a graph of questions and answers per month.

The second type is a bit trickier: it unpivots the second column in the result set. For example: a graph of questions per tag for top 10 tags.
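Here is a rough Python sketch of how the two result-set layouts could be turned into line-graph series. This is not Data Explorer's actual graphing code: the function names `simple_series` and `unpivot_series` and the sample rows are made up for illustration, and I am assuming the second layout means rows of (x, series name, value).

```python
from collections import defaultdict


def simple_series(columns, rows):
    """First column is the X-axis; every other column becomes one line."""
    series = {name: [] for name in columns[1:]}
    for row in rows:
        x = row[0]
        for name, value in zip(columns[1:], row[1:]):
            series[name].append((x, value))
    return series


def unpivot_series(rows):
    """Rows are (x, series_name, value); each distinct value in the
    second column becomes its own line."""
    series = defaultdict(list)
    for x, name, value in rows:
        series[name].append((x, value))
    return dict(series)


# Hypothetical sample data, not real site numbers.
monthly = [("2011-01", 100, 250), ("2011-02", 120, 300)]
print(simple_series(["Month", "Questions", "Answers"], monthly))

per_tag = [("2011-01", "c#", 40), ("2011-01", "java", 35), ("2011-02", "c#", 45)]
print(unpivot_series(per_tag))
```

In the first layout the line names come from the column headers; in the second they come from the data itself, which is why it suits "top 10 tags" style queries where the set of lines is not known up front.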

Huge open source upgrade

Data Explorer consumes a fair amount of open source libraries. In the past year and a half many have evolved. We took the time to upgrade them all.

The excellent CodeMirror was updated to version 2.0; the new version no longer uses messy iframes. Marijn wrote a great post explaining the changes, a fantastic read for any JavaScript developer.

SlickGrid, which in my opinion is the best grid control built on jQuery, was upgraded to version infinity.

100% more Dapper

Dapper, our open source micro ORM, is the only ORM Data Explorer uses. We took the time to port the entire solution to Dapper. I even added a few CRUD helpers so you are not stuck hard-coding INSERT and UPDATE statements everywhere.
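For anyone wondering what a CRUD helper buys you, here is a small Python sketch of the general idea, using the standard `sqlite3` module as a stand-in. To be clear, this is not Dapper's API and not the helpers in the Data Explorer source: the `insert` and `update` functions and the `Queries` table are hypothetical.

```python
import sqlite3


def insert(conn, table, values):
    """Build and run a parameterized INSERT from a dict of column -> value.
    Table and column names are assumed to come from trusted code, not users."""
    columns = ", ".join(values)
    params = ", ".join(f":{name}" for name in values)
    cursor = conn.execute(
        f"INSERT INTO {table} ({columns}) VALUES ({params})", values
    )
    return cursor.lastrowid


def update(conn, table, row_id, values):
    """Build and run a parameterized UPDATE for the row with the given Id."""
    assignments = ", ".join(f"{name} = :{name}" for name in values)
    conn.execute(
        f"UPDATE {table} SET {assignments} WHERE Id = :row_id",
        {**values, "row_id": row_id},
    )


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE Queries (Id INTEGER PRIMARY KEY, Title TEXT, Body TEXT)")

new_id = insert(conn, "Queries", {"Title": "Top posts", "Body": "SELECT 1"})
update(conn, "Queries", new_id, {"Title": "Top 10 posts"})
print(conn.execute("SELECT Id, Title, Body FROM Queries").fetchall())
```

The values always travel as parameters; only the statement shape is generated, which is what saves you from hand-writing each statement.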

Data Explorer is a good open source example of how we code web sites at Stack Overflow. It is built on our stack using many of our helpers. Dapper and related helpers are used for data access. It uses the same homebrew migration system we use in production and an interesting asset packaging system I wrote (for the record, Ben wrote a much more awesome one that we use in production; lobby him to get it blogged). It also uses MiniProfiler for profiling. MiniProfiler is even enabled in production, so go have a play.

Lots of smaller less notable fixes

- We now have a concept of “user preferences”, so we can remember which tab you selected, etc.

- We remember the page you were at and try to redirect you there after you log on.

- We attribute the query properly to the creator / editor from the query show page.

- You can page through your queries on your user page.

- Support for arbitrary hyperlinks

- Revamped object browser, you can collapse table definitions

- Lots of other stuff I forgot :)

You too can run Data Explorer

At Stack Exchange we run 3 different instances of Data Explorer. We have the public Data Explorer and a couple of private instances we use to explore other data sets. The first private instance is used for raw site database access. The other is used to browse through our haproxy logs.

There is nothing forcing you to point Data Explorer at a Stack Exchange data dump; the vast majority of the features work fine when pointed at an arbitrary database.

Hope you enjoy this round of changes.

If there are any bugs or feature requests please post them to Meta Stack Overflow. Data Explorer is open source, patches welcome.

Creative Commons Data Dump Sep ’11

While we will always continue to produce Stack Exchange creative commons data dumps, we are moving to a quarterly schedule for all future dumps. We won’t be blogging each and every one, as it gets a bit monotonous. Please subscribe to our Clear Bits creator feed to be automatically notified when new Stack Exchange creative commons data dumps become available!

The latest version of the Stack Exchange Creative Commons Data Dump is now available. This reflects all public data in …

- Stack Overflow

- Server Fault

- Super User

- Stack Apps

- all public non-beta Stack Exchange Sites

- all corresponding meta sites

… up to September 2011.

This month’s Stack Exchange data dump, as always, is hosted at ClearBits! You can subscribe via RSS to be notified every time a new dump is available.

If you’d prefer not to download the torrent and would rather play with the most recent public data in your web browser right now, check out our open source Stack Exchange Data Explorer.

Have fun remixing and reusing; all we ask is for proper attribution.

Creative Commons Data Dump Jan ’11

IMPORTANT: This torrent was originally uploaded incomplete. Our apologies. If you downloaded it before ~ 8 pm Pacific on January 16th, 2011, you should re-download it now. The correct size is > 3 GB; anything smaller is incorrect.

The latest version of the Stack Exchange Creative Commons Data Dump is now available. This reflects all public data in …

- Stack Overflow

- Server Fault

- Super User

- Stack Apps

- all public non-beta Stack Exchange Sites

- all corresponding meta sites

… up to Jan 2011.

This month’s Stack Exchange data dump, as always, is hosted at ClearBits! You can subscribe via RSS to be notified every time a new dump is available.

Please read, this is not the usual yadda yadda! Three things:

- Because the dumps are quite a bit of work for us, we’re moving to a tri-monthly schedule instead of monthly. Meaning, you can expect dumps every three months instead of every month. If you have an urgent need for more timely data than this, contact us directly, or use the Stack Exchange Data Explorer, which will continue to be updated monthly.

- The attribution rules have changed to forbid JavaScript generated attribution links.

- As of November 2010, we enhanced the format of the data dump to include more requested fields, full revision history, and many other pending meta requests tagged [data-dump]. That’s why the dump is so much larger, but we did break it out in individual files per site within the torrent, so you can download just the files you need.

If you’d prefer not to download the torrent and would rather play with this month’s data dump in your web browser right now, check out our open source Stack Exchange Data Explorer. Please note that it may take a few days for the SEDE to be updated with the latest dump.

Have fun remixing and reusing; all we ask is for proper attribution.

Re-Launching Stack Exchange Data Explorer

Since we launched the Stack Exchange Data Explorer in June, we’ve been actively maintaining it and making small improvements to it. But there is one big change — as of today, the site has permanently moved from odata.stackexchange.com to

data.stackexchange.com

If you’re wondering what the heck this thing is, do read the introductory blog post, but in summary:

Stack Exchange Data Explorer is a web tool for sharing, querying, and analyzing the Creative Commons data from every website in the Stack Exchange network. It’s also useful for learning SQL and for sharing SQL queries as a ‘reference database’.

We are redirecting all old links to the new path, so everything should work as before. Why did we make this change?

Mostly because we decided to move off the Windows Azure platform. While Microsoft generously offered us free Azure hosting in exchange for odata support and a small “runs on Azure” logo in the footer, it ultimately did not offer the level of control that we needed. I’ll let Sam Saffron, the principal developer of SEDE, explain:

Teething issues

When we first started working with Azure, tooling was very rough. Tooling for Visual Studio and .NET 4.0 support only appeared a month after we started development. Remote access to Azure instances was only granted a few weeks ago together with the ability to run non-user processes.

There are still plenty of teething issues left, for example: on the SQL Azure side we can’t run cross database queries, add full-text indexes or back up our dbs using the BACKUP command. I am sure these will eventually be worked out. There’s also the 30 minute deploy cycle. Found a typo on the website? Correcting it is going to take 30 minutes, minimum.

Due to many of these teething issues, debugging problems with our Azure instances quickly became a nightmare. I spent days trying to work out why we were having uptime issues, which have since been mostly sorted.

It is important to note that these issues are by no means specific to Azure; similar teething issues affect other Platform-as-a-Service providers such as Google App Engine and Heroku. When you are using a PaaS you are giving up a lot of control to the service provider. The service provider chooses which applications you can run and imposes a series of restrictions.

The life cycle of a data dump

Whenever there was a new data dump, I would log on to my Rackspace instance, download the data dump, decompress it, rename a bunch of folders, run my database importer, and wait an hour for it to load. If there were any new sites, I would open up a SQL window and hack them into the DB. This process was time consuming and fairly tricky to automate. It could have been automated, but that would have required lots of work from our side.

Now that we have migrated to servers we control, the process is almost simple: all we do is select a bunch of data from export views (containing public data) and insert them into a fresh DB. We are not stuck coordinating work between 4 machines across 3 different geographical locations.
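The "select from export views, insert into a fresh DB" step can be sketched in a few lines of Python. This uses in-memory SQLite as a stand-in for our real databases, so treat it as an illustration of the shape of the process rather than the actual job; the function `refresh_from_export_views`, the `Users` table and the `ExportUsers` view are all hypothetical.

```python
import sqlite3


def refresh_from_export_views(source, target, views):
    """Copy every row of each export view into a same-named table in target.
    View names are assumed to come from trusted configuration."""
    for view in views:
        cursor = source.execute(f'SELECT * FROM "{view}"')
        columns = [description[0] for description in cursor.description]
        column_list = ", ".join(f'"{name}"' for name in columns)
        placeholders = ", ".join("?" for _ in columns)
        target.execute(f'CREATE TABLE "{view}" ({column_list})')
        target.executemany(
            f'INSERT INTO "{view}" ({column_list}) VALUES ({placeholders})',
            cursor,
        )
    target.commit()


# Source DB: a private table plus a view exposing only the public columns.
source = sqlite3.connect(":memory:")
source.executescript("""
    CREATE TABLE Users (Id INTEGER, DisplayName TEXT, Email TEXT);
    INSERT INTO Users VALUES (1, 'alice', 'alice@example.com');
    CREATE VIEW ExportUsers AS SELECT Id, DisplayName FROM Users;
""")

fresh = sqlite3.connect(":memory:")
refresh_from_export_views(source, fresh, ["ExportUsers"])
print(fresh.execute("SELECT * FROM ExportUsers").fetchall())
```

Because the view decides which columns are public, the copy step itself never needs to know what is private.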

Did I mention we are control freaks?

At Stack Overflow we take pride in our servers. We spend weeks tweaking our hardware and software to ensure we get the best performance and in turn you, the end user, get the most awesome experience.

It was disorienting moving to a platform where we had no idea what kind of hardware was running our app. Giving up control of basic tools and processes we use to tune our environment was extremely painful.

We thank Microsoft for letting us try out Azure; based on our experience, we’ve given them a bunch of hopefully constructive feedback. In the long run, we think a self-hosted solution will be much simpler for us to maintain, tune and automate.

There are also a few other bits (nibbles?) of data news:

- We won’t be producing a data dump for the month of December 2010, but you can definitely expect one just after the new year. We apologize for the delay.

- SEDE will continue to be updated monthly as a matter of policy to keep it in sync with the monthly data dumps.

Remember, SEDE is fully open source, so if you want to help us hack on it, please do!

code.google.com/p/stack-exchange-data-explorer

And as usual, if you have any bugs or feedback for us, leave it in the [data-explorer] tag on meta, too.

Defending Attribution Required

All content contributed to the Stack Exchange network is licensed under cc-wiki (aka cc-by-sa).

What does this mean? In short, it’s a way of guaranteeing that we can’t ever do anything nefarious with the questions and answers the community have so generously shared with us. It’s not unheard of for some companies to arbitrarily decide that giving content back to the community is, er … well, let’s just say … not in their best commercial interests. Then they suddenly pull the rug out from under the very people that contributed the content that made them viable in the first place.

We wouldn’t want that done to us. And there’s no way we’re doing it to our community. To prove it, we adopted a licensing scheme that makes it impossible for us to do anything even partially-quasi-evil with our community’s content. Namely, cc-by-sa (aka cc-wiki), which gives everyone the following rights to all Stack Exchange data:

You are free:

- to Share — to copy, distribute and transmit the work

- to Remix — to adapt the work

Under the following conditions:

- Attribution — You must attribute the work in the manner specified by the author or licensor (but not in any way that suggests that they endorse you or your use of the work).

- Share Alike — If you alter, transform, or build upon this work, you may distribute the resulting work only under the same or similar license to this one.

This isn’t news, of course; it’s explained on the footer of every web page we serve. And note that we explicitly allow commercial usage — after all, we’re a commercial entity, so it felt only sporting to allow others the same rights we enjoyed.

What is news, is this: lately we’re getting a lot of reports of sites reposting our content (which is totally cool, and explicitly allowed), but not attributing it correctly … which is most decidedly not cool.

What are our attribution requirements?

Let me clarify what we mean by attribution. If you republish this content, we require that you:

- Visually indicate that the content is from Stack Overflow, Meta Stack Overflow, Server Fault, or Super User in some way. It doesn’t have to be obnoxious; a discreet text blurb is fine.

- Hyperlink directly to the original question on the source site (e.g., http://stackoverflow.com/questions/12345)

- Show the author names for every question and answer

- Hyperlink each author name directly back to their user profile page on the source site (e.g., http://stackoverflow.com/users/12345/username)

By “directly”, I mean each hyperlink must point directly to our domain in standard HTML visible even with JavaScript disabled, and not use a tinyurl or any other form of obfuscation or redirection. Furthermore, the links must not be nofollowed.
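If it helps, here is a minimal Python sketch of what a compliant attribution blurb could look like when generated server-side. It is one possible rendering under the rules above, not official markup: the helper names `attribution_html` and `is_direct_link` are hypothetical, and the URLs are the placeholder examples from the list.

```python
from html import escape
from urllib.parse import urlparse


def is_direct_link(url, source_domain):
    """True if the URL points straight at the source site, not a shortener."""
    host = urlparse(url).hostname or ""
    return host == source_domain or host.endswith("." + source_domain)


def attribution_html(site_name, question_url, authors):
    """Render plain HTML links (no JavaScript, no rel="nofollow").
    authors is a list of (display_name, profile_url) tuples."""
    author_links = ", ".join(
        f'<a href="{escape(url, quote=True)}">{escape(name)}</a>'
        for name, url in authors
    )
    return (
        f'<p>From <a href="{escape(question_url, quote=True)}">'
        f"{escape(site_name)}</a>, by {author_links}</p>"
    )


question = "http://stackoverflow.com/questions/12345"
authors = [("username", "http://stackoverflow.com/users/12345/username")]

# Refuse to render anything that goes through a redirect or URL shortener.
links = [question] + [url for _, url in authors]
assert all(is_direct_link(url, "stackoverflow.com") for url in links)

print(attribution_html("Stack Overflow", question, authors))
```

The output is ordinary anchor tags in the page source, so the links are visible with JavaScript disabled and carry no nofollow attribute.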

These attribution requirements are not complicated, nor are they particularly hard to find: they’re linked from the footer of every web page we serve, and included as a plaintext file in every public data dump we share.

We’ve been collecting a list of sites that are reposting our data without attributing it correctly — but it’s becoming something of an epidemic lately. Every other day now I get an email or meta report about a real live web search where someone found content that is clearly ripped off, has zero useful attribution, and a bucket of greasy, slimy ads slathered all over it to boot.

I’m starting to get fed up with these sites. Not because they’re abusing our website, but because they’re abusing you guys, our community — by reposting your questions and your answers with no attribution! The whole point of Stack Overflow, Server Fault, Super User, and every other Stack Exchange site is to give credit directly to the talented people providing all these fantastic answers. When a scraper site rips a great answer, removes all attribution and context, plasters it with cheap ads — and it shows up in a public web search result, as they increasingly do — everyone loses.

I’m not going to stand for this, at least not without a fight. We’re starting to email these sites and ask them very politely to please follow our simple attribution guidelines.

And if they don’t follow our simple attribution requirements when we’ve asked them nicely, well — we’re going to start asking them not so nicely. Namely, we will hit them where it hurts, in the pocketbook. Our pal How-to-Geek explains:

For the quickest results, you can send the DMCA to their web host, which you can generally figure out with whoishostingthis.com. Every single legit hosting center will have a “legal” or “copyright” page, and they will have a specific way to send in DMCA requests. Some of them require fax, though many are starting to accept email instead… and they will often have the content removed almost instantly. WordPress.com will instantly cancel their entire account, and other hosts tend to take very swift action, often disabling their whole site until they comply.

If you really want to cause them some pain, however, you can send the DMCA to their advertisers. Adsense is usually the first target for this, since so many of the jerks are using it. The only problem with Adsense is they require a DMCA fax.

There’s been once or twice where I’ve found a site that was hosted somewhere that doesn’t care about copyright… but every single ad network of any value is based in the US, and the jerk website owner isn’t going to mess around with their income stream.

Please help us defend your right to have your name and source attached to the content you’ve so generously contributed to our sites. We will absolutely do our part, but many hands make light work:

- Whenever you find a new site that is using our data without proper attribution, check this meta question and make sure it’s listed.

- If you have contact information for the site that is inappropriately using our content, forward it to us at [email protected] for action.

- If you’re feeling a bit miffed about the whole situation, don’t hesitate to forward a link to our attribution guidelines to the site operators, or their ISP, and briefly indicate specifically where they are not following them. Squeaky wheel gets the grease, and all that.

- If the site is wrapping the content in invasive ads that attempt to redirect the user or compromise their web experience in some way, I encourage you to report it at http://www.google.com/safebrowsing/report_badware/ ; I’m only adding this because it happened recently (!).

I’m always happy for our content to get remixed and reused, but at some point we have to start defending our attribution guidelines, or we are failing the community who trusted us with their content in the first place.

After all, if we don’t stick up for what’s right, and what’s fair — who will?
